Geospatial

- Earth Engine workflows

- DEM + hydrology layers

- Remote sensing indices

Time‑Series

- QA/QC + gap filling

- Seasonal/trend modelling

- Forecasting + uncertainty

Bayesian

- Hierarchical models

- Inverse modelling

- PPCs + priors

Machine Learning

- Supervised/unsupervised

- Feature engineering

- Model explainability

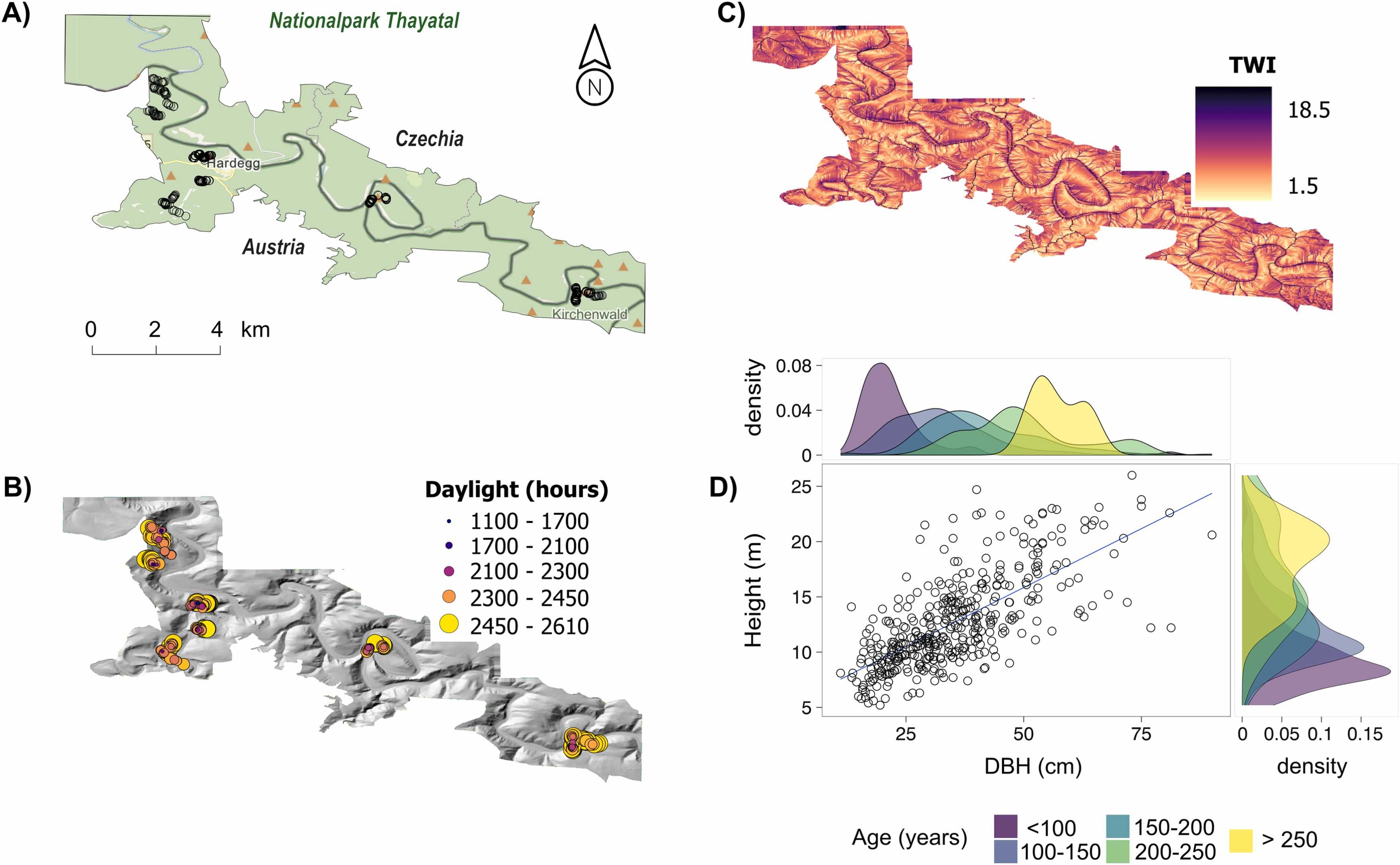

Geospatial Data

Modern environmental research relies heavily on remote sensing and satellite imagery. Google Earth Engine combines a multi‑petabyte catalog of satellite imagery and geospatial datasets with planetary‑scale analysis capabilities. We can use it to detect changes, map trends, and quantify differences on the Earth’s surface (earthengine.google.com).

I completed a bachelor's degree in geospatial analysis, mapping forest types, estimating vegetation indices, and deriving variables such as topographic, weather, and climate parameters.
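As a small illustration of what a vegetation index is, the sketch below computes the Normalised Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), from paired reflectance values. The reflectances are hypothetical, and the function name `ndvi` is chosen here for illustration; it is not an Earth Engine call.

```python
def ndvi(nir, red):
    """Normalised Difference Vegetation Index for paired reflectance values."""
    result = []
    for n, r in zip(nir, red):
        denom = n + r
        # Guard against division by zero (e.g. masked or no-data pixels).
        result.append((n - r) / denom if denom != 0 else float("nan"))
    return result


# Hypothetical surface reflectances for three pixels:
# dense canopy, sparse vegetation, open water.
nir = [0.50, 0.30, 0.02]
red = [0.05, 0.20, 0.04]

ndvi_values = ndvi(nir, red)
for label, value in zip(["dense canopy", "sparse vegetation", "water"], ndvi_values):
    print(f"{label}: NDVI = {value:.2f}")
```

Values close to 1 indicate dense green vegetation, values near 0 indicate bare or sparsely vegetated ground, and negative values are typical of water.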

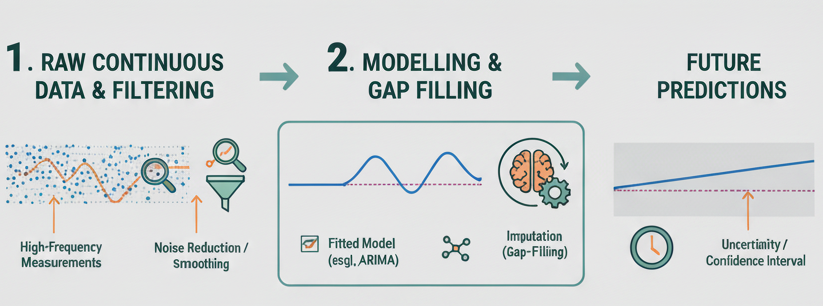

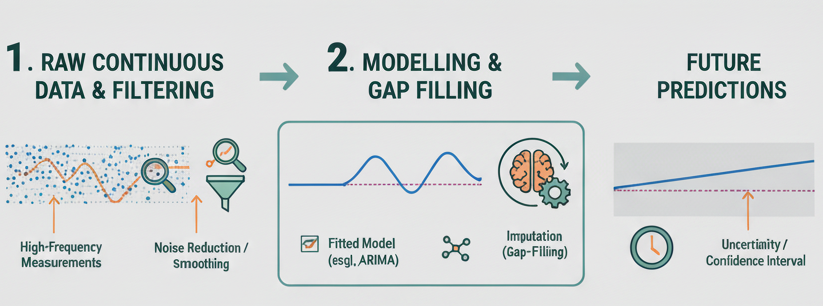

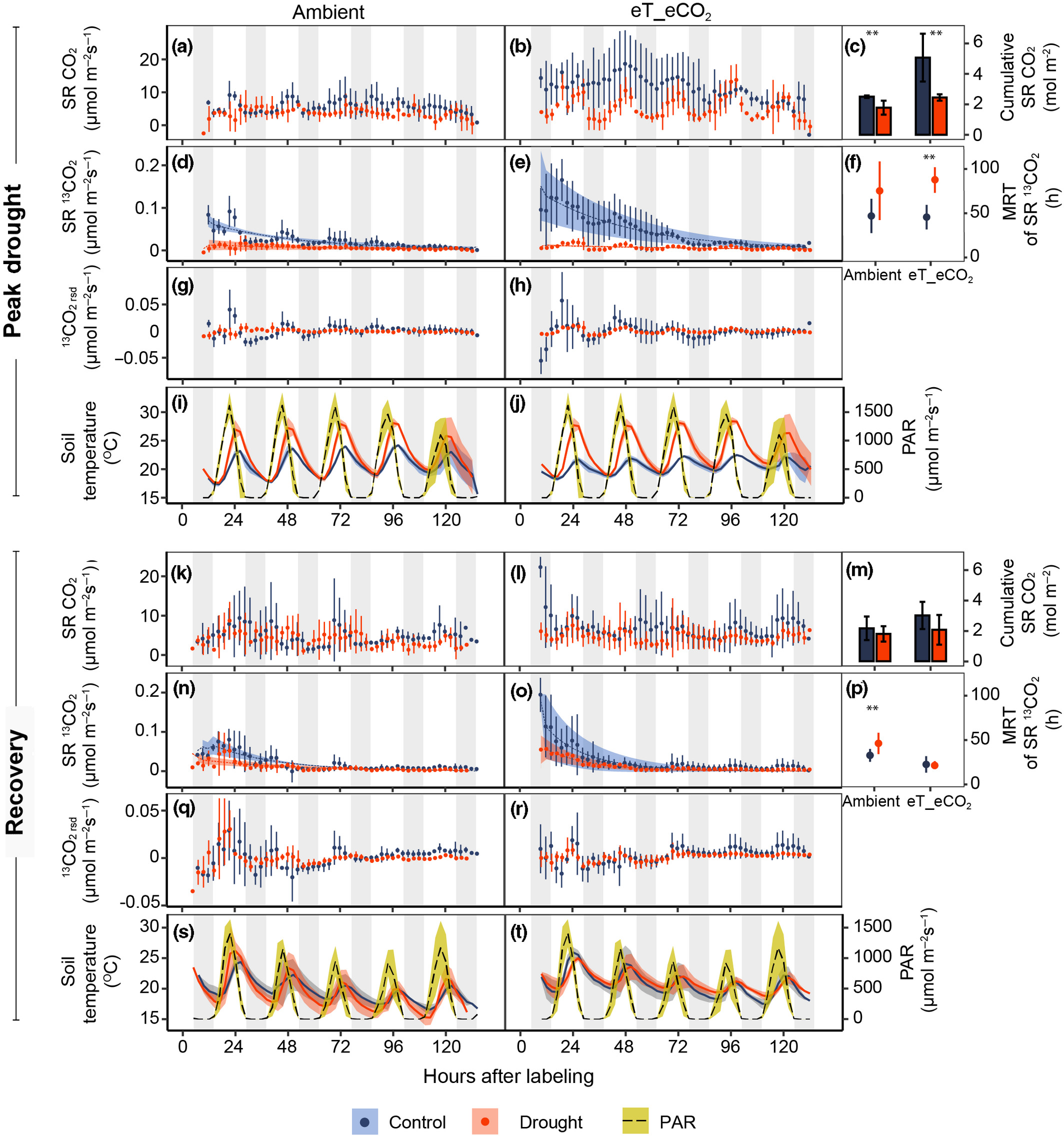

Time‑Series Analysis

Time‑series data are observations collected over time. In ecology, time‑series analysis uncovers patterns in population dynamics, species interactions, and ecosystem responses. Analysts examine autocorrelation, seasonality, and long‑term trends, using methods such as ARIMA, decomposition, and smoothing (example).
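The ideas of trend, seasonality, and autocorrelation can be sketched on a synthetic series. The example below is a minimal illustration, assuming a linear trend plus a 12‑step seasonal cycle. It estimates the trend with a classical centred moving average and then checks the autocorrelation of the detrended series. The function names and the synthetic data are assumptions made for this sketch.

```python
import math


def centred_moving_average(x, period):
    """Classical trend estimate for an even seasonal period (a 2 x period moving average).

    The two end points of each window get half weight, so exactly one full
    seasonal cycle is averaged out. Positions without a full window stay None.
    """
    half = period // 2
    trend = [None] * len(x)
    for t in range(half, len(x) - half):
        window = x[t - half : t + half + 1]
        total = sum(window) - 0.5 * (window[0] + window[-1])
        trend[t] = total / period
    return trend


def autocorrelation(x, lag):
    """Sample autocorrelation of x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    variance = sum((v - mean) ** 2 for v in x)
    covariance = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return covariance / variance


# Synthetic series: linear trend (0.1 per step) plus a seasonal cycle of period 12.
series = [0.1 * t + math.sin(2 * math.pi * t / 12) for t in range(48)]

trend = centred_moving_average(series, period=12)
detrended = [s - tr for s, tr in zip(series, trend) if tr is not None]

acf_6 = autocorrelation(detrended, 6)    # half a cycle apart: strongly negative
acf_12 = autocorrelation(detrended, 12)  # one full cycle apart: strongly positive
print(f"lag 6: {acf_6:.2f}, lag 12: {acf_12:.2f}")
```

A positive autocorrelation at the seasonal lag and a negative one at half that lag is the signature of a regular cycle. With real data, a dedicated library would normally handle the decomposition.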

We apply these models to greenhouse‑gas concentration, flux, and isotope data for gap‑filling, characterising diel and seasonal cycles, and forecasting emissions (example).
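Gap‑filling can take many forms. The sketch below shows only the simplest one, linear interpolation across short gaps, and is not meant to represent the specific method used in this work. The flux values and the function name `fill_gaps_linear` are hypothetical.

```python
def fill_gaps_linear(values):
    """Fill interior gaps (None) by linear interpolation between valid neighbours.

    Gaps at the start or end of the record have no neighbour on one side
    and are left as None.
    """
    filled = list(values)
    valid = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(valid, valid[1:]):
        span = right - left
        for i in range(left + 1, right):
            weight = (i - left) / span
            filled[i] = values[left] * (1 - weight) + values[right] * weight
    return filled


# Hypothetical flux record with missing observations marked as None.
flux = [10.0, None, None, 16.0, 15.0, None, 13.0, None]
filled_flux = fill_gaps_linear(flux)
print(filled_flux)
```

Linear interpolation is reasonable only for short gaps. Longer gaps in flux data usually call for methods that use environmental drivers or the diel cycle.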

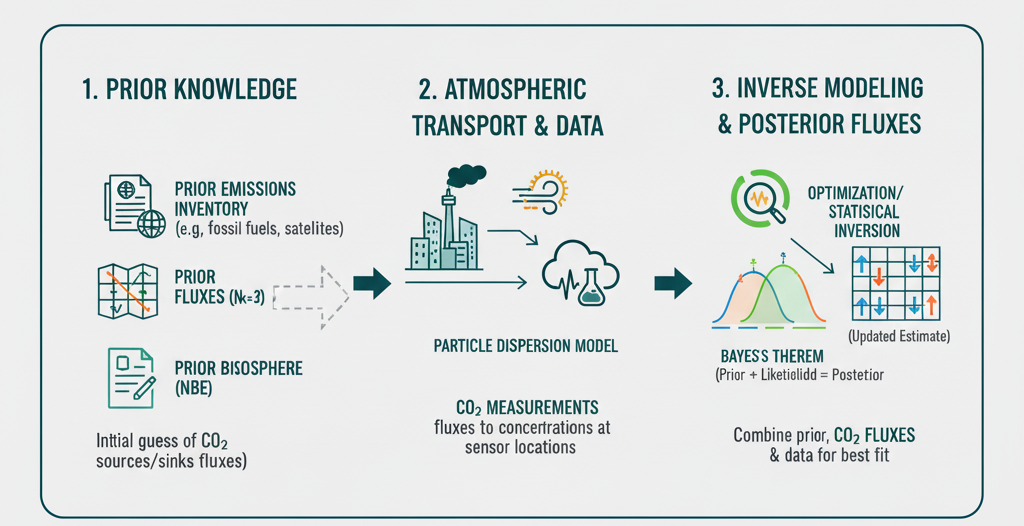

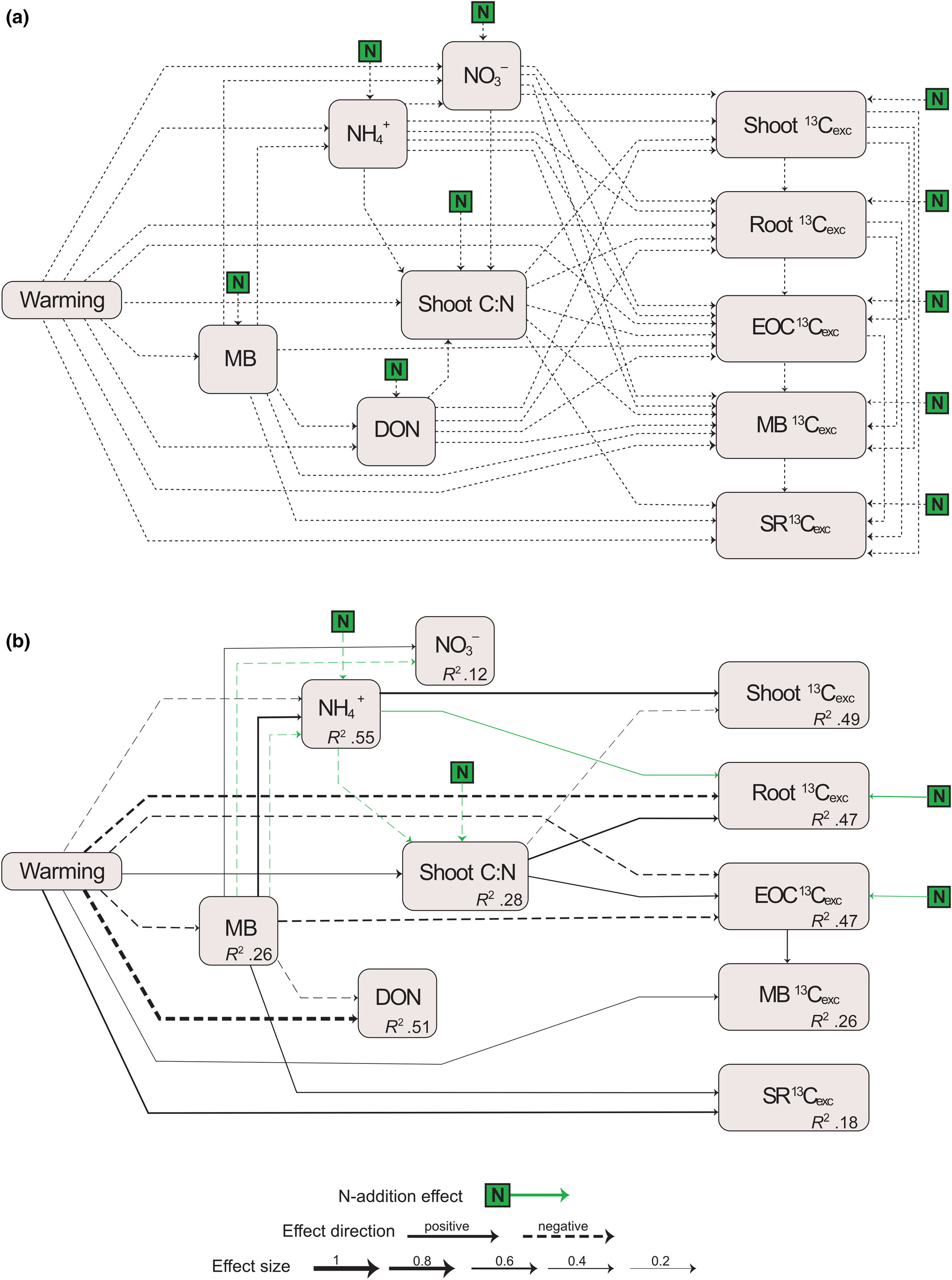

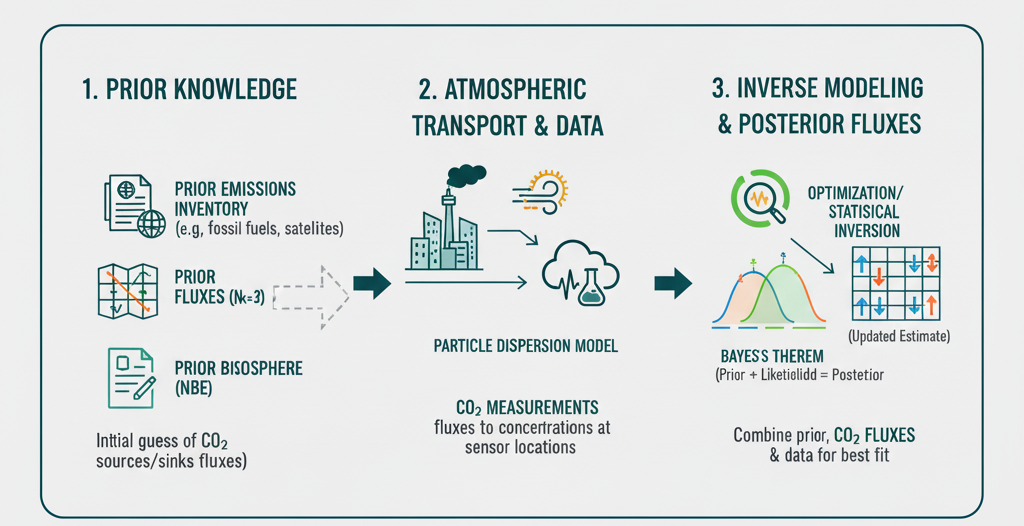

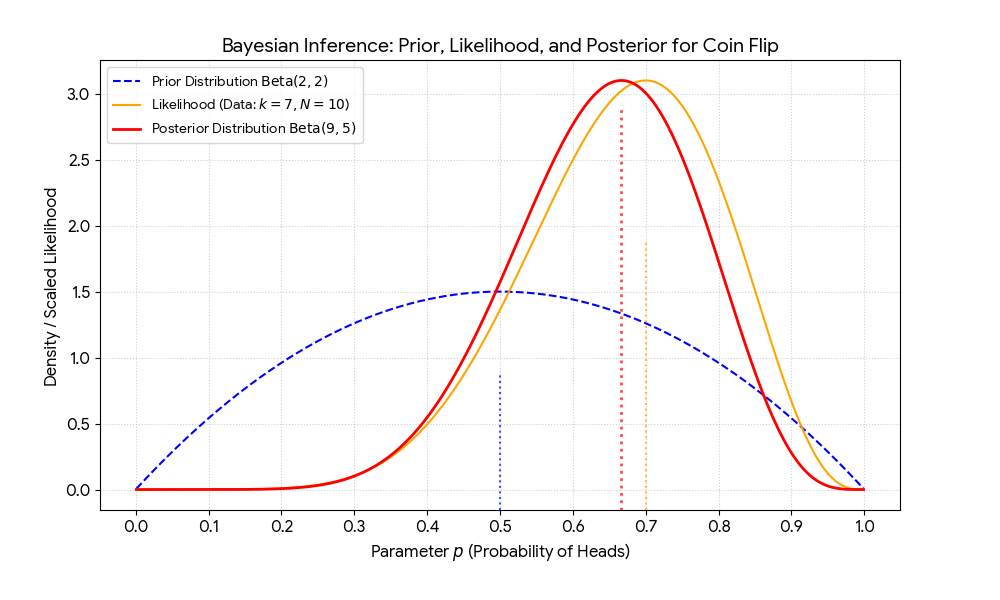

Bayesian Modelling

Bayesian statistics treats parameters as random variables and updates prior beliefs with observed data to obtain posterior distributions. This allows direct probability statements and inclusion of prior knowledge. Frequentist methods, by contrast, treat parameters as fixed and base inference on the likelihood of the data.
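The prior‑to‑posterior update is easiest to see in a conjugate case. The sketch below assumes a Beta prior on a detection probability and binomial survey data, so the posterior is again a Beta distribution. The prior parameters and survey counts are hypothetical, chosen only to illustrate the mechanics.

```python
import random


def beta_binomial_update(a_prior, b_prior, successes, trials):
    """Posterior Beta parameters after observing binomial data under a Beta prior."""
    return a_prior + successes, b_prior + (trials - successes)


# Hypothetical example: weak Beta(2, 2) prior, 7 detections in 10 surveys.
a_post, b_post = beta_binomial_update(2, 2, successes=7, trials=10)
posterior_mean = a_post / (a_post + b_post)

# A direct probability statement about the parameter, estimated by
# drawing from the posterior: P(theta > 0.5 | data).
rng = random.Random(42)
draws = [rng.betavariate(a_post, b_post) for _ in range(20000)]
prob_above_half = sum(d > 0.5 for d in draws) / len(draws)

print(f"posterior: Beta({a_post}, {b_post}), mean = {posterior_mean:.3f}")
print(f"P(theta > 0.5 | data) is approximately {prob_above_half:.2f}")
```

The last line is the kind of statement a frequentist confidence interval does not provide: a probability attached to the parameter itself, given the data and the prior.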

Bayesian techniques are especially useful for small samples or complex models; they provide intuitive uncertainty quantification. I use hierarchical models and inverse models to partition CO₂ and CH₄ sources with isotope constraints.
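To give a flavour of isotope‑constrained source partitioning, the sketch below solves a deliberately simplified problem: two sources, one isotope tracer, and a single observation. It assumes the mixing relation δ_mix = f·δ_A + (1 − f)·δ_B, Gaussian measurement error, and a uniform prior on the fraction f, evaluated on a grid. The end‑member values, observation, and error are illustrative assumptions rather than measurements, and the real hierarchical and inverse models are considerably richer than this.

```python
import math


def mixing_posterior(delta_obs, delta_a, delta_b, sigma, n_grid=1001):
    """Grid posterior for the fraction f of source A in a two-source mixture.

    Assumes a uniform prior on f in [0, 1] and Gaussian measurement error.
    """
    grid = [i / (n_grid - 1) for i in range(n_grid)]
    unnormalised = []
    for f in grid:
        predicted = f * delta_a + (1 - f) * delta_b
        unnormalised.append(math.exp(-0.5 * ((delta_obs - predicted) / sigma) ** 2))
    total = sum(unnormalised)
    return grid, [u / total for u in unnormalised]


def credible_interval(grid, posterior, level=0.95):
    """Equal-tailed credible interval read from the cumulative grid posterior."""
    tail = (1 - level) / 2
    cumulative, lower, upper = 0.0, None, None
    for f, p in zip(grid, posterior):
        cumulative += p
        if lower is None and cumulative >= tail:
            lower = f
        if upper is None and cumulative >= 1 - tail:
            upper = f
    return lower, upper


# Illustrative values in per mil (assumptions, not measurements).
grid, posterior = mixing_posterior(delta_obs=-52.0, delta_a=-60.0, delta_b=-40.0, sigma=1.0)
f_mean = sum(f * p for f, p in zip(grid, posterior))
f_low, f_high = credible_interval(grid, posterior)
print(f"fraction from source A: {f_mean:.2f} (95% interval {f_low:.2f} to {f_high:.2f})")
```

The width of the interval depends on the measurement error relative to the separation between the two end members: the closer the source signatures, the weaker the constraint.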

Machine Learning

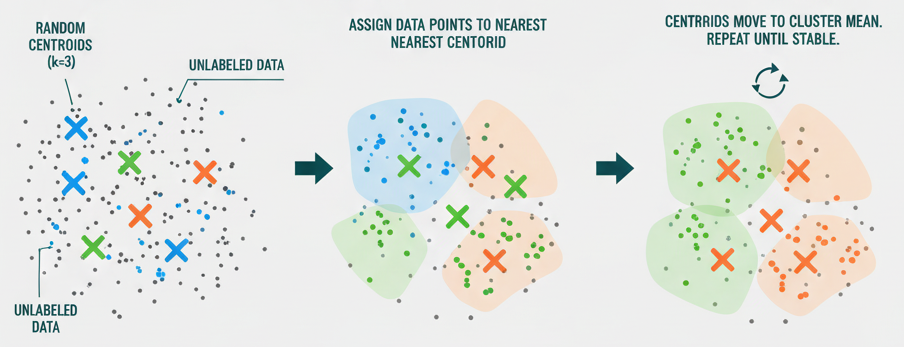

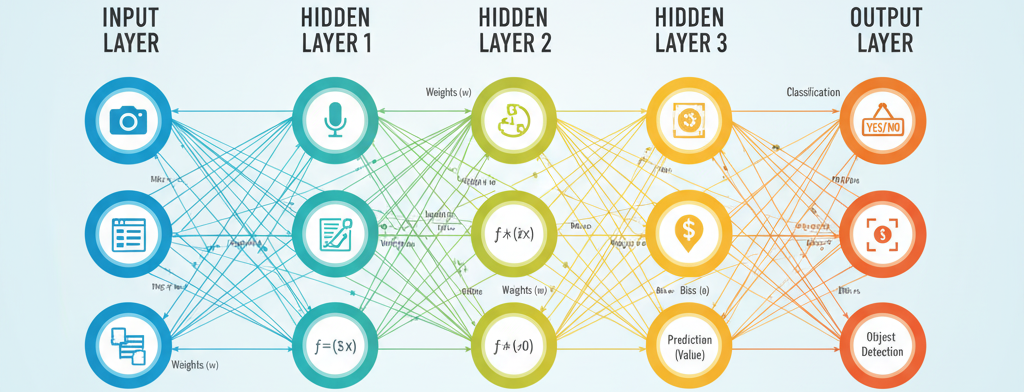

Machine learning enables pattern discovery and prediction. Random forests combine many decision trees, each trained on a bootstrap sample of the data with a random subset of features; predictions are made by averaging (regression) or voting (classification). Gradient boosting builds models sequentially, with each new model correcting the errors of the ensemble so far; XGBoost adds regularisation. In unsupervised learning, k‑means partitions data into k clusters by assigning each point to the nearest centroid and then updating the centroids.
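The k‑means procedure described above can be sketched in a few lines. This is a minimal version of the standard assign‑then‑update loop (Lloyd's algorithm) with random initial centroids, written without external libraries. The data points are hypothetical, and a production workflow would normally use an established implementation instead.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, n_iter=100, seed=0):
    """Basic k-means: assign each point to its nearest centroid, then update centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initial centroids drawn from the data
    labels = None
    for _ in range(n_iter):
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:  # assignments stable: converged
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids


# Hypothetical two-variable observations forming two well-separated groups.
points = [(1.0, 1.1), (0.9, 1.0), (1.2, 0.8), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

The cluster numbering is arbitrary and depends on the initial centroids, so only the grouping of points is meaningful, not the label values themselves.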

We classify land cover from satellite data, predict tree growth responses to climate variables, and extract patterns from multivariate ecological datasets.

Workflow

Sensors, satellites, towers, chambers; metadata‑rich ingestion.

QA/QC, harmonisation, gap‑filling, feature engineering.

Bayesian inference, inverse models, ML ensembles.

Interactive visuals, uncertainty communication, reproducible outputs.